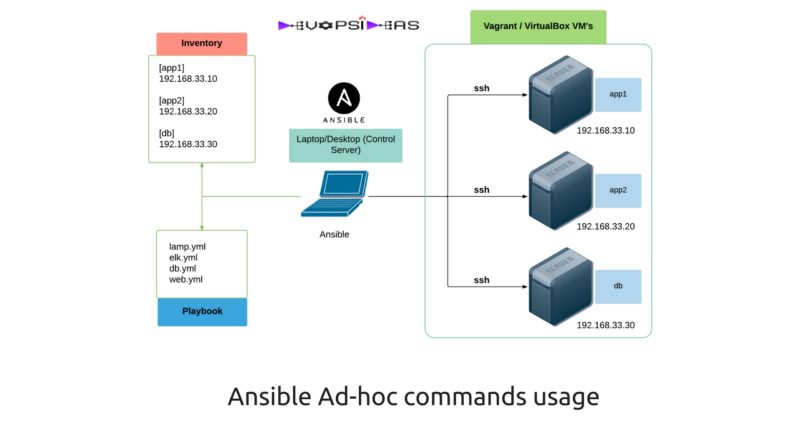

Ansible Ad-hoc commands usage

Need for Ansible Ad-hoc commands

Before getting into Ansible playbooks, we’ll explore Ansible ad-hoc commands and how they help you quickly perform common tasks and gather data from one or many servers. For configuration management and deployments, though, you’ll want to use playbooks; the concepts you learn here carry over directly to the playbook language.

Since the emergence of cloud computing and virtualization, the number of servers managed by an individual administrator has risen dramatically over the past decade. This has pushed admins to find ways to manage servers in a streamlined fashion. On any given day, a systems administrator might perform tasks like these:

- Check log files

- Check resource usage

- Manage system users and groups

- Check for patches and apply updates using package managers

- Deploy applications

- Manage cron jobs

- Reboot servers.

Most of these tasks may already be partially automated, but some still need manual intervention, especially when it comes to diagnosing issues in real time. In a complex multi-server environment, logging into each server manually to diagnose a problem is not a workable approach. Ansible lets sysadmins run ad-hoc commands on one or hundreds of machines at the same time using the ‘ansible’ command. Even if you decide to ignore the rest of Ansible’s powerful features, after reading this article you will be able to manage your servers much more efficiently.

Exploring Ad-hoc commands with local infrastructure

We will explore Ansible ad-hoc commands using the local infrastructure we created in the chapter “Ansible Local Testing: Vagrant and Virtualbox”. To recap, we created three virtual machines using Vagrant and VirtualBox. Two of them act as application servers (app) and the third as the database server (db); at this point, the roles are just names.

Important Note: The examples in this tutorial work with Ansible 2.0 and later. Versions prior to 2.0 might require small changes to variable names; these are pointed out in notes where they apply.

Inventory file

Our inventory file should look as below.

# Application servers
[app]
192.168.33.10
192.168.33.20

# Database server
[db]
192.168.33.30

# Group 'all' with all servers
[all:children]
app
db

# Variables that will be applied to all the servers
[all:vars]
ansible_user=vagrant
ansible_ssh_private_key_file=~/.vagrant.d/insecure_private_key

The contents of the hosts file are fairly self-explanatory. We created an ‘app’ group containing the IP addresses of the application servers, a ‘db’ group containing the IP address of the DB server, and an ‘all’ group whose children are the app and db groups, so it covers every server. We also set the ‘ansible_user’ variable for the ‘all’ group, so we don’t need to pass the user name with every command, and ‘ansible_ssh_private_key_file’, so Ansible logs in with Vagrant’s insecure private key.

Note: For Ansible versions prior to 2.0, the variable is named ‘ansible_ssh_user’ instead.

If you want to read more about Ansible inventory, refer to the “Ansible Configuration and Inventory files” chapter.
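If you want to recreate this inventory somewhere other than the default location, a small shell sketch like the one below can write it out and list its group headers. The helper names write_inventory and list_groups, and whatever target path you pass in, are illustrative assumptions, not part of Ansible itself.

```shell
# Sketch: write the inventory from this article to a file and list its groups.
# write_inventory and list_groups are hypothetical helper names.

write_inventory() {
    # $1: path of the inventory file to create.
    # The quoted 'EOF' stops the local shell from expanding ~ in the key path.
    cat > "$1" <<'EOF'
# Application servers
[app]
192.168.33.10
192.168.33.20

# Database server
[db]
192.168.33.30

# Group 'all' with all servers
[all:children]
app
db

# Variables that will be applied to all the servers
[all:vars]
ansible_user=vagrant
ansible_ssh_private_key_file=~/.vagrant.d/insecure_private_key
EOF
}

list_groups() {
    # Print the name inside each [section] header, one per line.
    sed -n 's/^\[\([^]]*\)]$/\1/p' "$1"
}
```

For example, after write_inventory ./hosts, running ansible all -i ./hosts --list-hosts should list the three IP addresses without executing anything on the servers.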

Ansible Modules

Before jumping into Ansible ad-hoc commands, it is important to understand Ansible modules. Ansible ships with a number of modules (called the ‘module library’) that can be executed directly on remote hosts, and users can also write their own. Modules can control system resources such as services, packages, or files (anything, really), or execute system commands. They are the backbone of Ansible.

There are three types of Ansible modules:

- Core modules – These are modules that are supported by Ansible itself.

- Extra modules – modules created by the community or by companies that are included in the distribution but may not be supported by Ansible.

- Deprecated modules – modules that are being replaced by a new module, or for which a newer module is preferred.

Ansible installs module documentation locally, so you can browse it from the command line. To list all the modules available in the library, run:

ansible-doc -l

To see details about a specific module, such as its parameter names and how to use it, run:

ansible-doc <module_name>

To get a short playbook snippet showing how to use a module, run:

ansible-doc -s <module_name>

The module to run is selected with the ‘-m’ flag, and its arguments are passed with ‘-a’. For example:

ansible app -b -m command -a "/sbin/reboot"

ansible app -m shell -a 'echo $TERM'

ansible app -m copy -a "src=/etc/hosts dest=/tmp/hosts"

ansible app -m file -a "dest=/home/devopsideas/a.txt mode=600"

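In these examples, we used the command, shell, copy and file modules to perform various operations. When an argument contains shell variables such as $TERM that should be expanded on the remote server, wrap it in single quotes; inside double quotes, your local shell expands them before Ansible ever sees them.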

Tip: ‘command’ is Ansible’s default module, so you don’t need to pass ‘-m command’ explicitly when using it.
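The quoting point above is easy to check without Ansible at all. This minimal sketch prints the exact string your local shell would hand to the -a option; show_arg is a hypothetical helper, and the TERM value is set only for the demonstration.

```shell
# Sketch: show the exact argument string the local shell passes to `ansible -a`.
# show_arg is a hypothetical helper; no Ansible is needed to run this.

show_arg() {
    # Print the first argument exactly as received, with no further expansion.
    printf '%s\n' "$1"
}

TERM=xterm-256color   # example local terminal type, set only for this demo

show_arg "echo $TERM"   # double quotes: expanded locally, prints: echo xterm-256color
show_arg 'echo $TERM'   # single quotes: passed through, prints: echo $TERM
```

With double quotes, every server would echo your local terminal type; with single quotes, each remote shell expands $TERM itself.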

Exploring Ansible Ad-hoc commands

In the previous chapter, we ran our first Ansible command to test server connectivity using the ‘ping’ module.

This section makes the following assumptions. You can skip them if you are using the setup from the “Ansible Local Testing: Vagrant and Virtualbox” tutorial.

- Connections to the servers use SSH keys rather than passwords. If you use password-based authentication, add the ‘-k’ flag so Ansible prompts for the SSH password.

- The user Ansible connects as has passwordless sudo access. If it does not, add the ‘-K’ flag so Ansible prompts for the sudo password when running commands that need sudo.

- The hosts (inventory) file is in the default location (/etc/ansible/hosts). If your hosts file lives elsewhere, point Ansible to it with the ‘ANSIBLE_INVENTORY’ environment variable (older releases used ‘ANSIBLE_HOSTS’), or pass its path with the ‘-i’ flag on the command line.

Ping test

Let’s start with a ping test to make sure we can connect to our servers.

$ ansible all -m ping

192.168.33.20 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.33.30 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.33.10 | SUCCESS => {

"changed": false,

"ping": "pong"

}

This is not an ICMP ping. The ping module verifies that Ansible can log in to the server and that a usable Python interpreter is available there. All three servers returned SUCCESS, so we are good to go.

Parallel nature of Ansible

Let’s make sure the host names are set correctly on all the servers.

$ ansible all -a "hostname"

192.168.33.30 | SUCCESS | rc=0 >>

db.dev

192.168.33.10 | SUCCESS | rc=0 >>

app1.dev

192.168.33.20 | SUCCESS | rc=0 >>

app2.dev

The host names come back in no particular order. By default, Ansible runs commands in parallel using multiple process forks (5 by default), so results are printed as each server responds. You can change the number of forks to suit your server capacity and requirements, as shown below:

$ ansible all -a "hostname" -f 1

192.168.33.10 | SUCCESS | rc=0 >>

app1.dev

192.168.33.20 | SUCCESS | rc=0 >>

app2.dev

192.168.33.30 | SUCCESS | rc=0 >>

db.dev

‘-f’ sets the number of forks (simultaneous processes) to use. Setting it to 1 makes Ansible run the command on one server at a time, in sequence, which is why the output now follows the inventory order. You will rarely need to do this; it is far more common to increase the value (for example -f 10 or -f 25, depending on how much your system and network connection can handle).

Note: For the above example, we did not specify the command module as “-m command” since that is the default module.
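If you find yourself passing the same -f value every time, you can set it once in a project-local ansible.cfg, which Ansible reads from the current directory (among other locations). The sketch below writes such a file; write_ansible_cfg is a hypothetical helper and 10 is only an example value.

```shell
# Sketch: set a default fork count once instead of passing -f every time.
# write_ansible_cfg is a hypothetical helper; 10 below is only an example.

write_ansible_cfg() {
    # $1: directory in which to create ansible.cfg
    # $2: default number of forks
    cat > "$1/ansible.cfg" <<EOF
[defaults]
forks = $2
EOF
}

# Example: write_ansible_cfg . 10   (then run ansible from that directory)
```

An -f value given on the command line still takes precedence over the file, and the ANSIBLE_FORKS environment variable offers the same setting without a file.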

Check Disk space

Now that we have made sure our host names are correct, let’s check the disk space on each server.

$ ansible all -a 'df -h'

192.168.33.10 | SUCCESS | rc=0 >>

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup00-LogVol00 38G 1.2G 37G 4% /

devtmpfs 107M 0 107M 0% /dev

tmpfs 119M 0 119M 0% /dev/shm

tmpfs 119M 4.4M 115M 4% /run

tmpfs 119M 0 119M 0% /sys/fs/cgroup

/dev/sda2 1014M 89M 926M 9% /boot

tmpfs 24M 0 24M 0% /run/user/1000

192.168.33.20 | SUCCESS | rc=0 >>

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup00-LogVol00 38G 1.2G 37G 4% /

devtmpfs 107M 0 107M 0% /dev

tmpfs 119M 0 119M 0% /dev/shm

tmpfs 119M 4.4M 115M 4% /run

tmpfs 119M 0 119M 0% /sys/fs/cgroup

/dev/sda2 1014M 89M 926M 9% /boot

tmpfs 24M 0 24M 0% /run/user/1000

192.168.33.30 | SUCCESS | rc=0 >>

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup00-LogVol00 38G 1.2G 37G 4% /

devtmpfs 107M 0 107M 0% /dev

tmpfs 119M 0 119M 0% /dev/shm

tmpfs 119M 4.4M 115M 4% /run

tmpfs 119M 0 119M 0% /sys/fs/cgroup

/dev/sda2 1014M 89M 926M 9% /boot

tmpfs 24M 0 24M 0% /run/user/1000
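Reading df output for every server gets tedious as the inventory grows. The sketch below, a hypothetical full_filesystems helper written in awk, scans the ad-hoc output and prints only the filesystems at or above a usage threshold, together with their host. It assumes the “HOST | STATUS | rc=N >>” header lines shown above and df’s default columns; adding -P to df (df -hP) keeps each filesystem on a single line, which the parser relies on.

```shell
# Sketch: report filesystems at or above a usage threshold, per host.
# Expects the output of: ansible all -a 'df -hP'

full_filesystems() {
    # $1: threshold in percent, e.g. 80
    awk -v limit="$1" '
        / [|] / { host = $1; next }            # "HOST | STATUS | rc=N >>" header
        $5 ~ /^[0-9]+%$/ {                      # data rows only (skips the Use% header)
            use = $5
            sub(/%$/, "", use)                  # "9%" becomes "9"
            if (use + 0 >= limit + 0) print host, $6, $5
        }
    '
}

# Example: ansible all -a 'df -hP' | full_filesystems 80
```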

Check server memory

$ ansible all -a 'free -m'

192.168.33.30 | SUCCESS | rc=0 >>

total used free shared buff/cache available

Mem: 236 73 20 4 143 129

Swap: 1535 0 1535

192.168.33.10 | SUCCESS | rc=0 >>

total used free shared buff/cache available

Mem: 236 73 19 4 143 129

Swap: 1535 0 1535

192.168.33.20 | SUCCESS | rc=0 >>

total used free shared buff/cache available

Mem: 236 73 20 4 143 129

Swap: 1535 0 1535

Ensure the date and time on the servers are in sync

$ ansible all -a 'date'

192.168.33.20 | SUCCESS | rc=0 >>

Mon Feb 20 14:50:31 UTC 2017

192.168.33.10 | SUCCESS | rc=0 >>

Mon Feb 20 14:50:31 UTC 2017

192.168.33.30 | SUCCESS | rc=0 >>

Mon Feb 20 14:50:31 UTC 2017
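Comparing human-readable dates by eye works for three servers but not for fifty. As a rough sketch, you can ask for epoch seconds with date +%s and compute the spread between the fastest and slowest clock. clock_spread is a hypothetical helper; the spread also includes the time Ansible takes to reach each server, so a second or two is normal, and this is no substitute for checking NTP offsets directly.

```shell
# Sketch: measure how far apart the servers' clocks are, in seconds.
# Expects the output of: ansible all -a 'date +%s'
# clock_spread is a hypothetical helper name.

clock_spread() {
    awk '
        /^[0-9]+$/ {                 # epoch timestamps; host header lines are skipped
            v = $1 + 0
            if (n == 0 || v < min) min = v
            if (n == 0 || v > max) max = v
            n++
        }
        END { if (n > 0) print max - min }
    '
}

# Example: ansible all -a 'date +%s' | clock_spread
```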

So far we have only checked the status of the servers. Let’s make some changes to them using various Ansible modules.

Install NTP daemon on the servers

$ ansible all -b -m yum -a 'name=ntp state=present'

192.168.33.30 | SUCCESS => {

"changed": true,

"msg": "",

"rc": 0,

...

192.168.33.20 | SUCCESS => {

"changed": true,

"msg": "",

"rc": 0,

"results": [

...

192.168.33.10 | SUCCESS => {

"changed": true,

"msg": "",

"rc": 0,

"results": [

"Loaded plugins:

...

We explicitly use the ‘yum’ module here because our servers run CentOS; on Ubuntu servers you would use the ‘apt’ module instead. Both modules accept several parameters, and in this example we use only the ones we need. The ‘name’ parameter specifies the package (‘ntp’), and ‘state’ specifies whether it should be installed or removed (‘present’ installs it, ‘absent’ removes it). The ‘-b’ (--become) flag tells Ansible to run the command with privilege escalation, which is sudo by default.

Ensure ntpd daemon is running and set to run on boot

$ ansible all -b -m service -a 'name=ntpd state=started enabled=yes'

192.168.33.20 | SUCCESS => {

"changed": true,

"enabled": true,

"name": "ntpd",

"state": "started"

}

192.168.33.10 | SUCCESS => {

"changed": true,

"enabled": true,

"name": "ntpd",

"state": "started"

}

192.168.33.30 | SUCCESS => {

"changed": true,

"enabled": true,

"name": "ntpd",

"state": "started"

}

Here we use the ‘service’ module: ‘state=started’ makes sure the service is running, and ‘enabled=yes’ configures it to start automatically when the server boots.

Configure Application and Database Server

Let’s assume our application servers will host a Django application, with MariaDB running on the DB server as its database.

First, we need to make sure that Django and its dependencies are installed. Django is not in the official CentOS yum repositories, but we can install it with Python’s easy_install.

Application configuration

First, install two dependencies: MySQL-python, the driver Django needs to talk to MariaDB, and python-setuptools, which provides easy_install.

Step 1: Install MySQL-python on the app servers using the yum module

$ ansible app -b -m yum -a "name=MySQL-python state=present"

192.168.33.10 | SUCCESS => {

"changed": true,

"msg": "",

"rc": 0,

"results": [

"Loaded plugins: fastestmirror\nLoading mirror speeds from cached hostfile\n

...

192.168.33.20 | SUCCESS => {

"changed": true,

"msg": "",

"rc": 0,

"results": [

"Loaded plugins: fastestmirror\nLoading mirror speeds from cached hostfile\n * base:

...

Step 2: Install python-setuptools using the yum module

$ ansible app -b -m yum -a "name=python-setuptools state=present"

192.168.33.20 | SUCCESS => {

"changed": true,

"msg": "",

"rc": 0,

"results": [

"Loaded plugins: fastestmirror\nLoading mirror speeds from cached hostfile\n * base:

...

192.168.33.10 | SUCCESS => {

"changed": true,

"msg": "",

"rc": 0,

"results": [

"Loaded plugins: fastestmirror\nLoading mirror speeds from cached hostfile\n

...

Step 3: Install Django using the easy_install module

$ ansible app -b -m easy_install -a "name=django"

192.168.33.20 | SUCCESS => {

"binary": "/bin/easy_install",

"changed": true,

"name": "django",

"virtualenv": null

}

192.168.33.10 | SUCCESS => {

"binary": "/bin/easy_install",

"changed": true,

"name": "django",

"virtualenv": null

}

Step 4: Make sure Django is installed and working correctly

$ ansible app -a "python -c 'import django; print(django.get_version())'"

192.168.33.20 | SUCCESS | rc=0 >>

1.10.5

192.168.33.10 | SUCCESS | rc=0 >>

1.10.5

Database configuration

We configured the application servers using the app group defined in Ansible’s main inventory, and we can configure the database server (currently the only server in the db group) using the similarly defined db group.

Let’s install MariaDB, start it, and configure the server’s firewall to allow access on MariaDB’s default port, 3306.

Step 1: Install MariaDB using the yum module

$ ansible db -b -m yum -a "name=mariadb-server state=present"

192.168.33.30 | SUCCESS => {

"changed": true,

"msg": "",

"rc": 0,

"results": [

"Loaded plugins: fastestmirror\nLoading mirror speeds from cached hostfile\n

...

Step 2: Start the MariaDB service and enable it at boot

$ ansible db -b -m service -a "name=mariadb state=started enabled=yes"

192.168.33.30 | SUCCESS => {

"changed": true,

"enabled": true,

"name": "mariadb",

"state": "started"

}

Step 3: Add an iptables rule that allows access to MariaDB (port 3306) from the 192.168.33.0/24 network, which includes the application servers.

$ ansible db -b -a "iptables -A INPUT -s 192.168.33.0/24 -p tcp -m tcp --dport 3306 -j ACCEPT"

192.168.33.30 | SUCCESS | rc=0 >>

Step 4: Install the MySQL-python package, which the mysql_user module used in the next step needs on the database server

$ ansible db -b -m yum -a "name=MySQL-python state=present"

192.168.33.30 | SUCCESS => {

"changed": true,

"msg": "",

"rc": 0,

"results": [

"Loaded plugins: fastestmirror\nLoading mirror speeds from cached hostfile

...

Step 5: Create a MySQL user and password for accessing the database using the mysql_user module.

$ ansible db -b -m mysql_user -a "name=django host=% password=121212 priv=*.*:ALL state=present"

192.168.33.30 | SUCCESS => {

"changed": true,

"user": "django"

}

We have now configured the servers to host our hypothetical web application without logging into any of them, just by running a few commands. By now, you may have realized how simple and powerful Ansible is.

Make changes to just one server

Assume the ntpd service on one of the application servers has crashed and stopped. You need to find out which server it stopped on and restart it on that server only.

Step 1: Check the status of the service on the servers in the app group

$ ansible app -b -a "service ntpd status"

192.168.33.10 | SUCCESS | rc=0 >>

● ntpd.service - Network Time Service

Loaded: loaded (/usr/lib/systemd/system/ntpd.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2017-02-20 15:47:18 UTC; 2h 0min ago

...

192.168.33.20 | FAILED | rc=3 >>

● ntpd.service - Network Time Service

Loaded: loaded (/usr/lib/systemd/system/ntpd.service; enabled; vendor preset: disabled)

Active: inactive (dead) since Mon 2017-02-20 17:48:09 UTC; 6s ago

Process: 2884 ExecStart=/usr/sbin/ntpd -u ntp:ntp $OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 2885 (code=exited, status=0/SUCCESS)

From the command output, we can see that the ntpd service on 192.168.33.20 (app2.dev) is stopped: the status command returned rc=3, which Ansible reports as FAILED. (I stopped it manually for this test.)

Step 2: Restart the service only on the affected server using the --limit option.

$ ansible app -b -a "service ntpd restart" --limit 192.168.33.20

192.168.33.20 | SUCCESS | rc=0 >>

Redirecting to /bin/systemctl restart ntpd.service

The --limit (-l) flag restricts the hosts on which the command or playbook runs to those matching the given pattern.

The --limit flag accepts wildcards and regular expressions. A regular expression must be prefixed with ‘~’.

For example,

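# Limit hosts with a simple pattern (the asterisk is a wildcard).

$ ansible app -b -a "service ntpd restart" --limit '*.20'

# Limit hosts with a regular expression (prefix it with a tilde).

$ ansible app -b -a "service ntpd restart" --limit '~.*\.20'

Quote the patterns so that your local shell passes them to Ansible unchanged instead of trying to expand them itself.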
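With more than a handful of servers, you may prefer to extract the failing hosts from the output instead of reading it. The sketch below, a hypothetical failed_hosts helper, picks out hosts whose status is FAILED or UNREACHABLE and joins them with commas, a form --limit accepts. It assumes the “HOST | STATUS ...” header lines shown above.

```shell
# Sketch: collect the hosts that failed an ad-hoc command into a --limit value.
# failed_hosts is a hypothetical helper; it assumes "HOST | STATUS ..." headers.

failed_hosts() {
    # Print FAILED or UNREACHABLE hosts read from stdin, joined with commas.
    awk -F' [|] ' '$2 ~ /^(FAILED|UNREACHABLE)/ { print $1 }' | paste -s -d, -
}

# Example (restart ntpd only where the status check failed):
#   hosts=$(ansible app -b -a "service ntpd status" | failed_hosts)
#   [ -n "$hosts" ] && ansible app -b -a "service ntpd restart" --limit "$hosts"
```

The empty-string check in the example matters: without it, a run where every host is healthy would call ansible with an empty --limit.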

Manage Users and Groups

Ansible’s user and group modules make managing users and groups simple. Let’s add an admin group to all the servers in our inventory.

$ ansible all -b -m group -a "name=admin state=present"

192.168.33.10 | SUCCESS => {

"changed": true,

"gid": 1001,

"name": "admin",

"state": "present",

"system": false

}

192.168.33.20 | SUCCESS => {

"changed": true,

"gid": 1001,

"name": "admin",

"state": "present",

"system": false

}

192.168.33.30 | SUCCESS => {

"changed": true,

"gid": 1001,

"name": "admin",

"state": "present",

"system": false

}

The group module has several other options. For example, you can set the group ID with ‘gid=[gid]’, or mark the group as a system group with ‘system=yes’.

Now that the admin group exists, let’s add a user ‘devopsadmin’ with admin as the user’s primary group.

$ ansible all -b -m user -a "name=devopsadmin group=admin createhome=yes"

192.168.33.20 | SUCCESS => {

"changed": true,

"comment": "",

"createhome": true,

"group": 1001,

"home": "/home/devopsadmin",

"name": "devopsadmin",

"shell": "/bin/bash",

"state": "present",

"system": false,

"uid": 1001

}

192.168.33.10 | SUCCESS => {

"changed": true,

"comment": "",

"createhome": true,

"group": 1001,

"home": "/home/devopsadmin",

"name": "devopsadmin",

"shell": "/bin/bash",

"state": "present",

"system": false,

"uid": 1001

}

192.168.33.30 | SUCCESS => {

"changed": true,

"comment": "",

"createhome": true,

"group": 1001,

"home": "/home/devopsadmin",

"name": "devopsadmin",

"shell": "/bin/bash",

"state": "present",

"system": false,

"uid": 1001

}

‘createhome=yes’ ensures that a home directory is created for the user. You can also generate an SSH key for the user with the ‘generate_ssh_key=yes’ parameter.

Run the command again with ‘generate_ssh_key=yes’ added to create an SSH key pair for devopsadmin:

$ ansible all -b -m user -a "name=devopsadmin group=admin createhome=yes generate_ssh_key=yes"

192.168.33.10 | SUCCESS => {

"append": false,

"changed": true,

"comment": "",

"group": 1001,

"home": "/home/devopsadmin",

"move_home": false,

"name": "devopsadmin",

"shell": "/bin/bash",

"ssh_fingerprint": "2048 93:aa:8d:13:19:eb:d0:84:b1:88:95:9f:e7:83:20:f1 ansible-generated on app1.dev (RSA)",

"ssh_key_file": "/home/devopsadmin/.ssh/id_rsa",

"ssh_public_key": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCmILbQIPW0USn/UN1TvTw3qPVvLeKoMY+lUCdGO7MauoM1KOYF3SCUBVtV8d7SdvlSh6iojSJpoWMAmlbJh8HrSHzcJCDsAINZZHumiqRZX5hfpopMUOns+1Z786PgMc3QY/dZ+rENngkH3k92D5mlEFhYutzvBVpe/VBF+e5fAJdXvmKDq/jXPd2BBaojAtxR8SxyahUI8+XHZNDSxHRuFe0dSQo6DrnVv7K8bOg/c5qGWzbQOYqQs4g6mMF538uPnl9fIy4twpgj/kmJCAGuxlQszJw0IrsRCrOX42mNZbui58R6Hhod8v+TohPAoy/t1OGC43awxJIXoNqNzoA/ ansible-generated on app1.dev",

"state": "present",

"uid": 1001

}

192.168.33.20 | SUCCESS => {

"append": false,

"changed": true,

"comment": "",

"group": 1001,

"home": "/home/devopsadmin",

"move_home": false,

"name": "devopsadmin",

"shell": "/bin/bash",

"ssh_fingerprint": "2048 c7:5d:18:7a:16:6e:ff:24:62:de:93:46:b8:bb:dd:2d ansible-generated on app2.dev (RSA)",

"ssh_key_file": "/home/devopsadmin/.ssh/id_rsa",

"ssh_public_key": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDZForWGXnjvw9SRbc1VkDrVSzffcG7C5blc3ecFHFVeoZBJ4TGSfvFXkBjNQNB4PaToT5LSKIkVaLFBcs2CsdQ4vPj+TKtWu9/z3glo+VJoyzTlRm0s/vH6gYS1BvOSoS4S8bIAoJA8DElpYsnnsv0/EBLWRq2EA3CmKFzaRIsbVGudC8fVDACVwauHemnm/9yy3E/v6B80R31/UcGSSvhri5bC+h21O5Zg0lbLhbcYqUd4tL7Ror9J7U73JdanTnHtyAS93oAr2jcx5qGNsmVjpJ1U20eDJ6xSr8vy31UKPOXFwdXoefnV/quQIEfeQhL/DYxGHr8n1xzCEVYF10F ansible-generated on app2.dev",

"state": "present",

"uid": 1001

}

192.168.33.30 | SUCCESS => {

"append": false,

"changed": true,

"comment": "",

"group": 1001,

"home": "/home/devopsadmin",

"move_home": false,

"name": "devopsadmin",

"shell": "/bin/bash",

"ssh_fingerprint": "2048 5e:a1:c3:c7:a8:00:67:ae:78:13:75:79:31:90:c8:ef ansible-generated on db.dev (RSA)",

"ssh_key_file": "/home/devopsadmin/.ssh/id_rsa",

"ssh_public_key": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDRltKm+dsxJq7cIfi8QPJvSshrmK7zaob9z9abUkJOb4T/o0dQ85Yy1cKDxrRMJbfPV6IPrhHqGDam1B49GoSAu8fv5wR8XODq7XUmASUJHzSBzM/XbHhQDHDcczNhxsf6RitfojxG5SlPW6squBLBL/2tFLW7RHquAurq9D3e8XFkrFKONsBjq4x+PAexEurLeLfriA9fFzyc737kNF6dcGpJfej8P2QadvkY3vOx5rqUhNR9oHZnRftHt3wK7mg31lsr5BWjSnHoYx/YEavVWdOQAYCqwW30N9Yxk8jEAIK3O69QAAX7AR+VFWdq6fR2aCKii9dnUuNfhp//Vdrj ansible-generated on db.dev",

"state": "present",

"uid": 1001

}

The key pairs were created for devopsadmin, and the output includes each public key. You can use these to automate key-based logins between the servers if needed.
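As a first step toward that automation, the public keys can be pulled out of the ad-hoc output into one local file. This is a sketch under the assumption that each key appears on its own “ssh_public_key” line, as in the output above; public_keys is a hypothetical helper name.

```shell
# Sketch: extract every "ssh_public_key" value from user-module output.
# public_keys is a hypothetical helper; it assumes one key per output line.

public_keys() {
    # Print each public key found on stdin, one per line.
    sed -n 's/.*"ssh_public_key": *"\([^"]*\)".*/\1/p'
}

# Example:
#   ansible all -b -m user -a "name=devopsadmin generate_ssh_key=yes" \
#       | public_keys > devopsadmin_keys.pub
```

The resulting file could then be distributed with Ansible’s authorized_key module; treat that as a suggested next step rather than something shown in this article.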

Let’s say we want to revoke devopsadmin’s access to the DB server. We can do that with the command below; ‘remove=yes’ also deletes the user’s home directory, like userdel --remove.

$ ansible db -b -m user -a "name=devopsadmin state=absent remove=yes"

192.168.33.30 | SUCCESS => {

"changed": true,

"force": false,

"name": "devopsadmin",

"remove": true,

"state": "absent"

}

Manage files and directories

Another common use for ad-hoc commands is remote file management. Ansible makes it easy to copy files from your host to remote servers, create directories, manage file and directory permissions and ownership, and delete files or directories.

Copy files to servers

You can use either the copy or the synchronize module to copy files and directories to remote servers.

$ ansible db -m copy -a "src=test.txt dest=/tmp/"

192.168.33.30 | SUCCESS => {

"changed": true,

"checksum": "da39a3ee5e6b4b0d3255bfef95601890afd80709",

"dest": "/tmp/test.txt",

"gid": 1000,

"group": "vagrant",

"md5sum": "d41d8cd98f00b204e9800998ecf8427e",

"mode": "0664",

"owner": "vagrant",

"secontext": "unconfined_u:object_r:user_home_t:s0",

"size": 0,

"src": "/home/vagrant/.ansible/tmp/ansible-tmp-1487846240.68-117216171000195/source",

"state": "file",

"uid": 1000

}

The src can be a file or a directory. If src is a directory and ends with a trailing slash, only its contents are copied into dest. Without the trailing slash, the directory itself is copied into dest along with its contents.

The copy module is perfect for single-file copies, and works very well with small directories. When you want to copy hundreds of files, especially in very deeply-nested directory structures, you should consider either copying then expanding an archive of the files with Ansible’s unarchive module, or using Ansible’s synchronize module.

$ ansible db -m synchronize -a "src=test.txt dest=/tmp/host.txt"

192.168.33.30 | SUCCESS => {

"changed": true,

"cmd": "/usr/bin/rsync --delay-updates -F --compress --archive --rsh '/usr/bin/ssh -i /Users/devopsideas/.vagrant.d/insecure_private_key -S none -o StrictHostKeyChecking=no' --out-format='<<CHANGED>>%i %n%L' \"/Users/devopsideas/Ansible-Tutorial/test.txt\" \"vagrant@192.168.33.30:/tmp/host.txt\"",

"msg": "<f+++++++ test.txt\n",

"rc": 0,

"stdout_lines": [

"<f+++++++ test.txt"

]

}

Retrieve file from the servers

The fetch module works like the copy module, but in the opposite direction: it copies files from the remote servers to the local machine. The files are stored under the local dest in a directory structure that matches the host they came from. For example, use the following command to grab the hosts file from each server:

$ ansible all -b -m fetch -a "src=/etc/hosts dest=/Users/devopsideas/Ansible-Tutorial/"

192.168.33.20 | SUCCESS => {

"changed": true,

"checksum": "562116c2ecb4fff34f16acdadf32b65f8aa7c5d4",

"dest": "/Users/devopsideas/Ansible-Tutorial/192.168.33.20/etc/hosts",

"md5sum": "c2e131778ff9dfd49dee5de231570556",

"remote_checksum": "562116c2ecb4fff34f16acdadf32b65f8aa7c5d4",

"remote_md5sum": null

}

192.168.33.30 | SUCCESS => {

"changed": true,

"checksum": "a71c6a8ffd0ff6560272f521cff604ed5a1425b1",

"dest": "/Users/devopsideas/Ansible-Tutorial/192.168.33.30/etc/hosts",

"md5sum": "66e108d30f146ce20551fdc9c7d6833c",

"remote_checksum": "a71c6a8ffd0ff6560272f521cff604ed5a1425b1",

"remote_md5sum": null

}

192.168.33.10 | SUCCESS => {

"changed": true,

"checksum": "68dbe09e68a1c33a1755507b7e5b529c9ba6b645",

"dest": "/Users/devopsideas/Ansible-Tutorial/192.168.33.10/etc/hosts",

"md5sum": "93279ad1e91b4df5212d5165882929e3",

"remote_checksum": "68dbe09e68a1c33a1755507b7e5b529c9ba6b645",

"remote_md5sum": null

}

Fetch will, by default, put the /etc/hosts file from each server into a folder in the destination with the name of the host (in our case, the three IP addresses), then in the location defined by src. So, the db server’s hosts file will end up in /Users/devopsideas/Ansible-Tutorial/192.168.33.30/etc/hosts.

$ pwd

/Users/devopsideas/Ansible-Tutorial

$ ls -ltr

total 8

-rw-r--r-- 1 devopsideas staff 960 Feb 20 14:59 Vagrantfile

-rw-r--r-- 1 devopsideas staff 0 Feb 23 16:05 test.txt

drwxr-xr-x 3 devopsideas staff 102 Feb 23 16:22 192.168.33.30

drwxr-xr-x 3 devopsideas staff 102 Feb 23 16:22 192.168.33.20

drwxr-xr-x 3 devopsideas staff 102 Feb 23 16:22 192.168.33.10
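Fetching the same file from every server is a quick way to spot configuration drift. The sketch below, a hypothetical differing_hosts helper, walks the dest/host/ layout that fetch produces and prints every host whose copy differs from the first host’s copy (in directory-name order). It uses only POSIX shell tools.

```shell
# Sketch: after `fetch`, report which hosts' copies differ from the first one.
# differing_hosts is a hypothetical helper; it assumes fetch's default layout
# of <dest>/<host>/<path on the remote server>.

differing_hosts() {
    # $1: the fetch dest directory
    # $2: remote path that was fetched, without the leading slash (e.g. etc/hosts)
    ref=""
    for dir in "$1"/*/; do
        host=$(basename "$dir")
        file="$dir$2"
        [ -f "$file" ] || continue          # skip directories without the file
        if [ -z "$ref" ]; then
            ref=$file                        # first host found is the reference
        elif ! cmp -s "$ref" "$file"; then
            echo "$host"
        fi
    done
}

# Example: differing_hosts /Users/devopsideas/Ansible-Tutorial etc/hosts
```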

Create directories and files

You can use the file module to create files and directories (like touch), manage permissions and ownership on files and directories, modify SELinux properties, and create symlinks.

Here’s how to create a directory:

$ ansible db -m file -a "dest=/tmp/test mode=644 state=directory"

192.168.33.30 | SUCCESS => {

"changed": true,

"gid": 1000,

"group": "vagrant",

"mode": "0644",

"owner": "vagrant",

"path": "/tmp/test",

"secontext": "unconfined_u:object_r:user_tmp_t:s0",

"size": 6,

"state": "directory",

"uid": 1000

}

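Note that mode=644 gives the directory no execute (search) permission, so only root can enter it or access the files inside it; directories normally use mode=755 instead.

We can also create symbolic links as below: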

$ ansible db -b -m file -a "src=/tmp/hosts dest=/etc/hosts owner=root group=root state=link force=yes"

192.168.33.30 | SUCCESS => {

"changed": true,

"dest": "/etc/hosts",

"src": "/tmp/hosts",

"state": "absent"

}

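The ‘state=link’ parameter creates a symlink. ‘force=yes’ makes Ansible create the link even if the source (/tmp/hosts) does not exist yet, and replace dest if it already exists as a regular file. Be careful with this example outside a test VM: it replaces the server’s real /etc/hosts with a link to a file that does not exist, which can break local host name lookups.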

Deleting directories and files

Let’s remove the test files we created in the previous steps, since we don’t need them anymore.

$ ansible db -m file -a "dest=/tmp/test.txt state=absent"

192.168.33.30 | SUCCESS => {

"changed": true,

"path": "/tmp/test.txt",

"state": "absent"

}

$ ansible db -m file -a "dest=/tmp/host.txt state=absent"

192.168.33.30 | SUCCESS => {

"changed": true,

"path": "/tmp/host.txt",

"state": "absent"

}

$ ansible db -m file -a "dest=/tmp/hosts state=absent"

192.168.33.30 | SUCCESS => {

"changed": false,

"path": "/tmp/hosts",

"state": "absent"

}

Run operations in the background

Some operations take quite a while (minutes or even hours). For example, when you run yum update or apt-get update && apt-get dist-upgrade, it could be a few minutes before all the packages on your servers are updated.

In these situations, you can tell Ansible to run the commands asynchronously and poll the servers to see when the commands finish. When you’re managing only one server, this is not very helpful, but with many servers, Ansible starts the command very quickly on all of them (especially if you set a higher --forks value) and then polls them for status until they’re all up to date.

To run a command in the background, you set the following options:

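- -B <seconds>: the maximum amount of time (in seconds) to let the job run.

- -P <seconds>: the amount of time (in seconds) to wait between polls of the servers for an updated job status. If you omit it, Ansible polls every 15 seconds; with -P 0, it starts the job and returns immediately without polling.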

$ ansible -b app -B 3600 -a "yum -y update"
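The command above keeps your terminal busy while Ansible polls. With -P 0, Ansible instead returns a job ID per host, which you can check later with the async_status module. The sketch below pairs each host with its job ID; job_ids is a hypothetical helper, and it assumes each result contains an "ansible_job_id" field as async results do. We also assume that, because the job ran with -b, its status must be queried with -b too.

```shell
# Sketch: start a long job in the background with -P 0, then look it up later.
# job_ids is a hypothetical helper; it assumes each host's result contains
# "ansible_job_id": "<id>" (multi-line or -o one-line output both work).

job_ids() {
    # Print "HOST JOB_ID" for every job id found on stdin.
    awk '
        / [|] / { host = $1 }                         # "HOST | STATUS => {" header
        /"ansible_job_id"/ {
            id = $0
            sub(/.*"ansible_job_id": *"/, "", id)     # drop text before the value
            sub(/".*/, "", id)                        # drop the closing quote onward
            print host, id
        }
    '
}

# Example (the job ran with -b, so check it with -b as well):
#   ansible app -b -B 3600 -P 0 -a "yum -y update" | job_ids |
#   while read -r host jid; do
#       ansible "$host" -b -m async_status -a "jid=$jid"
#   done
```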

Deploy version controlled application

For simple application deployments, where you may need to update a git checkout or copy new code to a group of servers and then run a command to finish the deployment, Ansible’s ad-hoc mode can help. More complicated deployments call for a different approach, which we will see in a later section.

$ ansible app -b -m git -a "repo=git://example.com/path/to/repo.git dest=/opt/myapp update=yes version=1.2.3"

Ansible’s git module lets you specify a branch, tag, or even a specific commit with the version parameter. update=yes tells Ansible to fetch new revisions and update the checked-out copy (this is also the module’s default). The repo and dest options should be self-explanatory. Note that git itself must be installed on the app servers for the module to work.

Then, run the application’s update.sh shell script if that’s part of your deployment.

$ ansible app -b -a "/opt/myapp/update.sh"

Conclusion

In this section, we have configured, monitored, and managed the infrastructure without ever logging in to an individual server. You also learned how Ansible connects to remote servers, and how to use the ansible command to perform tasks on many servers quickly in parallel, or one by one.

By now, you should be familiar with the basics of Ansible and ready to start managing your own infrastructure more efficiently.

In the next section, we will learn how to create Ansible playbooks and dive deeper into the real power of Ansible.